Neural Network Online

The neural network calculator learns the relationship between the inputs and the outputs, predicts the outputs for new data, and generates the network plot.

Data: use Enter as delimiter; you may change the delimiters on 'More options'.

The neural network calculator will train on the rows that contain both input data (X) and output data (Y), and run the network on the rows that contain only input data (X).

Neural Network Calculator

Online neural network calculator with an advanced plot generator. Supports feed-forward, RNN, LSTM, and GRU architectures.

What is a neural network? (Machine Learning)

You may think of a neural network as a black box that receives data from the input neurons (X). The data flows through the neural network and produces a result at the output neurons (Y).

In some cases, you may think of a neural network as similar to regression, but neural networks can be used to solve more complex problems or may achieve better results for certain tasks.

Neural networks can be structured in many ways. The structure includes the number of layers between the input and output (hidden layers), the function used to transmit data between two neurons (activation function), and more.

Any neuron, except for the input neurons, receives data from several other neurons, but it does not treat each neuron equally. It processes each signal according to the weight assigned to each connection.

To make the network effective, we need to train it with correct input and output data. The learning process determines the weights of each connection.

After the training process, we can test the network's quality and use it to predict the output based on input data.

Examples:

- Handwritten character recognition: The image of a character contains 64×64 pixels, which are represented by 4,096 input neurons. The output is a single categorical variable representing an ASCII character, selected from 128 possible characters, and is represented by 128 output neurons.
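As a rough illustration of how one categorical variable can map to 128 output neurons, the following Python sketch encodes an ASCII character as a vector with a single active position (one-hot encoding). The names `one_hot` and `decode` are hypothetical helpers, not part of the calculator, and the calculator's internal encoding may differ.

```python
NUM_CLASSES = 128  # one output neuron per ASCII character, as in the example above

def one_hot(char: str) -> list[float]:
    """Return a 128-element target vector with 1.0 at the character's ASCII code."""
    code = ord(char)
    if not 0 <= code < NUM_CLASSES:
        raise ValueError(f"{char!r} is not an ASCII character")
    target = [0.0] * NUM_CLASSES
    target[code] = 1.0
    return target

def decode(outputs: list[float]) -> str:
    """Pick the character whose output neuron has the highest activation."""
    best = max(range(len(outputs)), key=lambda i: outputs[i])
    return chr(best)

target = one_hot("A")
print(len(target), target.index(1.0))  # vector length and the active position
print(decode(target))                  # maps the vector back to a character
```

During inference, the output neurons hold scores rather than exact zeros and ones, so `decode` simply takes the neuron with the highest value.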

Artificial Network Neuron

An artificial network neuron is a simplified model of a biological neuron used in neural networks.

It is a mathematical construct that simulates how a neuron processes inputs to produce an output.

An artificial neuron receives inputs and produces an output through the following components:

- Input

If the neuron is in the first hidden layer, the input comes from the input layer (x1, x2, ..., xn).

If the neuron is in a deeper hidden layer, the input comes from the previous hidden layer.

- Linear Combination Function – a function that computes a weighted sum of the input values along with a bias.

- Weights – the strength of the connections from input neurons.

- Bias – a constant value that allows the neuron to produce an output even when all inputs are zero.

It is the y-intercept in the linear equation. The bias does not depend on the input.

- Activation Function – a non-linear function that determines the output passed to the next layer based on the result of the linear combination.

See the activation functions supported by this calculator.

- Output – the final result after applying the activation function.

If the neuron is not in the last hidden layer, the output goes to the next hidden layer.

If it is in the last hidden layer, the output goes to the output layer.
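The components above can be combined into a few lines of Python. This is a minimal sketch of a single neuron, assuming a sigmoid activation. The function names `sigmoid` and `neuron_output` and the sample weights are illustrative, not taken from the calculator.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus the bias, passed through the activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # linear combination
    return activation(z)

# With all inputs at zero, only the bias determines the output.
print(neuron_output([0.0, 0.0], [0.4, -0.7], bias=0.0))  # sigmoid(0) = 0.5
print(neuron_output([0.0, 0.0], [0.4, -0.7], bias=2.0))  # larger than 0.5
```

The second call shows why the bias matters: the neuron can still produce a non-neutral output when every input is zero.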

Feedforward Neural network example

The network contains 1 input layer, 2 hidden layers, and 1 output layer.

Fill "4,4" in the 'Hidden Layers' field.
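To make the 'Hidden Layers' value concrete, here is a small Python sketch of how a string such as "4,4" could be turned into layer sizes, and how many weights and biases the resulting fully connected network would have. The helpers `parse_hidden_layers` and `count_parameters` are hypothetical, and the 3 inputs and 1 output are assumed only for the example.

```python
def parse_hidden_layers(text: str) -> list[int]:
    """Turn a comma-separated string such as '4,4' into a list of layer sizes."""
    sizes = [int(part) for part in text.split(",") if part.strip()]
    if not sizes or any(n <= 0 for n in sizes):
        raise ValueError("expected positive integers separated by commas")
    return sizes

def count_parameters(n_inputs: int, hidden: list[int], n_outputs: int) -> int:
    """Count weights and biases of a fully connected feed-forward network."""
    layers = [n_inputs, *hidden, n_outputs]
    # Each neuron has one weight per neuron in the previous layer, plus one bias.
    return sum((fan_in + 1) * fan_out for fan_in, fan_out in zip(layers, layers[1:]))

hidden = parse_hidden_layers("4,4")
print(hidden)                          # [4, 4]
print(count_parameters(3, hidden, 1))  # 16 + 20 + 5 = 41
```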

Recurrent Neural network example

The network contains 1 input layer, 1 hidden layer, and 1 output layer.

Fill "5" in the 'Hidden Layers' field.

How to use the neural network calculator?

Use the neural network calculator when the problem is complex and involves non-linear relationships.

Enter the complete data, input (X) and output (Y), for the training process, and only the input data (X) for the inference process.

Data

- Training data (X, Y) – contains both input and output data used to train the network.

- Input data (X) – contains only input data; the output is not provided.

Processes

When you press 'Calculate', the following processes may be performed:

- Training – if training data is available, the calculator will train the network and save the trained model under the specified 'Network name'.

- Loading – if training data is not available, the calculator will attempt to load a saved network using the 'Network name'.

- Inference – the calculator uses the trained network to predict the output based on the input data.
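The decision between the three processes can be sketched as follows. This is an illustrative Python outline only: `split_rows` and `plan_processes` are hypothetical names, a row is assumed to be an `(X, Y)` pair with `Y` set to `None` when the output is missing, and raising an error when no saved network exists is an assumption about behavior the text does not describe.

```python
def split_rows(rows):
    """Separate rows with both X and Y (training) from rows with only X (inference)."""
    training = [(x, y) for x, y in rows if y is not None]
    inference = [x for x, y in rows if y is None]
    return training, inference

def plan_processes(rows, saved_networks: set, network_name: str) -> list:
    """Return the processes that would run, in order."""
    training, inference = split_rows(rows)
    steps = []
    if training:
        steps.append("train")   # train and save under network_name
    elif network_name in saved_networks:
        steps.append("load")    # reuse a previously saved network
    else:
        # Assumption: with no training data and no saved network, nothing can run.
        raise LookupError(f"no saved network named {network_name!r}")
    if inference:
        steps.append("infer")   # predict Y for the rows that have only X
    return steps

rows = [([1.0], [2.0]), ([2.0], [3.0]), ([3.0], None)]
print(plan_processes(rows, set(), "my-network"))                    # ['train', 'infer']
print(plan_processes([([3.0], None)], {"my-network"}, "my-network"))  # ['load', 'infer']
```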

How to enter data?

- Enter raw data directly - usually you have the raw data.

a. Enter the name of the group.

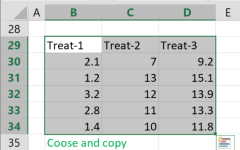

b. Enter the raw data separated by 'comma', 'space', or 'enter'. (*You may copy only the data from Excel.)

- Enter raw data from Excel

Enter the header on the first row.

- Copy Paste

a. Copy the raw data with the header from Excel, Google Sheets, or any tool that separates data with tabs and line feeds. Copy the entire block, including the header.

b. Paste the data in the input field.

- Import data from an Excel or CSV file.

When you select an Excel file, the calculator will automatically load the first sheet and display it in the input field. You can choose either an Excel file (.xlsx or .xls) or a CSV file (.csv).

To upload your file, use one of the following methods:

- Browse and select - Click the 'Browse' button and choose the file from your computer.

- Drag and drop - Drag your file and drop it into the 'Drop your .xlsx, .xls, or .csv file here!' area.

Now, the 'Select sheet' dropdown will be populated with the names of your sheets, and you can choose any sheet.

- Filter Data

When using the 'Enter data from Excel' option, you can filter the data by clicking the following icon above the header:

You may select one or more values from the dropdown. Please note that the filter will include any value that contains the values you choose.

Options

- Input variables (X):

Numerical input - the number of input neurons equals the number of variables.

- Output variables (Y):

Numerical output - the number of output neurons equals the number of variables.

Categorical output - the number of output neurons equals the total number of categories across all the variables.

- Hidden Layers:

The configuration of neuron layers between the input and the output.

More complex problems require more hidden layers.

More hidden layers require more training time.

You should start with one hidden layer and increase it gradually if needed.

Similarly to regression, too many layers might cause overfitting.

Enter how many neurons each layer should have. Separate numbers with commas (e.g., 3,5,2).

Examples:

3 - One layer with 3 neurons.

5,4 - Two layers: the first with 5 neurons, the second with 4 neurons.

- Scaling:

When the data is numerical, scaling it before training the network may lead to better performance.

- Standardize - transform the data to have a mean of 0 and a standard deviation of 1.

- None - don't transform the data.
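The 'Standardize' option corresponds to the usual z-score transformation. The Python sketch below shows the idea for a single variable. The function name `standardize` is illustrative, and using the population standard deviation (`statistics.pstdev`) rather than the sample standard deviation is an assumption, since the text does not say which one the calculator uses.

```python
import statistics

def standardize(values):
    """Rescale values to mean 0 and standard deviation 1 (z-scores)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)  # assumption: population standard deviation
    if sd == 0:
        raise ValueError("cannot standardize a constant column")
    return [(v - mean) / sd for v in values]

scaled = standardize([2.0, 4.0, 6.0, 8.0])
print(scaled)
print(statistics.fmean(scaled), statistics.pstdev(scaled))  # approximately 0 and 1
```

New input rows used for inference would need to be transformed with the same mean and standard deviation that were computed from the training data.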

- Models:

- Feedforward Neural Network - Feedforward network with backpropagation for classification and regression.

- Recurrent Neural Networks (RNN) -

For sequential data, where the next state depends on the current state.

The network receives its previous outputs as input for the next data row.

Examples include text, speech, and time series, where the order of elements is important.

Example: Train a network to predict the next number in a simple sequence.

input: 1 output: 2.

input: 2 output: 3.

input: 1 output: 2.

- RNN - Recurrent Neural Network - simple, with short memory: it does not consider elements that are too far back in the sequence.

- LSTM - Long Short-Term Memory - similar to RNN, but designed to retain information over longer sequences.

- GRU - Gated Recurrent Unit - variation of LSTMs, aiming to achieve similar performance with a simpler structure and fewer parameters.
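The idea that a recurrent network feeds its previous state into the next row can be sketched with a single recurrent unit. This Python example is a simplified illustration, not the calculator's implementation: `rnn_forward` is a hypothetical name, the weights are arbitrary untrained values, and the tanh activation is assumed.

```python
import math

def rnn_forward(sequence, w_in, w_rec, bias):
    """Run a one-unit recurrent cell over a sequence; return the state after each element."""
    state = 0.0  # no memory before the first element
    states = []
    for x in sequence:
        # The new state depends on the current input and on the previous state.
        state = math.tanh(w_in * x + w_rec * state + bias)
        states.append(state)
    return states

with_memory = rnn_forward([1, 2, 1], w_in=0.5, w_rec=0.8, bias=0.0)
no_memory = rnn_forward([1, 2, 1], w_in=0.5, w_rec=0.0, bias=0.0)
print(with_memory[0] == with_memory[2])  # False: the same input 1 gives different states
print(no_memory[0] == no_memory[2])      # True: without recurrence, history is ignored
```

LSTM and GRU cells replace the single `tanh` update with gated updates, which is what lets them keep information for longer than this simple cell.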

- Activation Function:

The function that determines the output a neuron will pass to the next layer, based on the input it receives from the previous layer.

- Sigmoid

  - Domain: All real numbers, (-∞, +∞)

  - Range: Real numbers between 0 and 1, (0, 1)

  - Used in the output layer for binary classification

  - Avoid in hidden layers due to vanishing gradients

- ReLU (Rectified Linear Unit) ReLU(x) = max(0, x).

  - Domain: All real numbers, (-∞, +∞)

  - Range: Non-negative real numbers, [0, +∞)

  - Great for hidden layers, fast and simple

  - Can suffer from “dying ReLU” where some neurons stop updating.

- Leaky ReLU - Like ReLU, but allows small negative outputs to avoid dead neurons. Good for deeper networks where ReLU struggles.

- Tanh

  Output is zero-centered, which can help learning.

  - Domain: All real numbers, (-∞, +∞)

  - Range: Real numbers between -1 and 1, (-1, 1)

  - Used in some RNNs, but still suffers from vanishing gradients.
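For reference, the four activation functions can be written in a few lines of Python. This is a sketch using the standard definitions. The leaky ReLU slope of 0.01 is a commonly used value assumed here for illustration, and the calculator may use a different one.

```python
import math

def sigmoid(x: float) -> float:
    """Range (0, 1). Written in two branches to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def relu(x: float) -> float:
    """Range [0, +inf): negative inputs are clipped to zero."""
    return max(0.0, x)

def leaky_relu(x: float, slope: float = 0.01) -> float:
    """Like ReLU, but negative inputs keep a small slope (assumed 0.01 here)."""
    return x if x >= 0 else slope * x

def tanh(x: float) -> float:
    """Range (-1, 1), zero-centered."""
    return math.tanh(x)

for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), relu(x), leaky_relu(x), tanh(x))
```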

- Learning Rate:

The learning rate is a number between 0 and 1 that determines the size of the gradient update in each iteration. The default is 0.3. It controls how much the weights are adjusted during each step of the training process. A smaller learning rate means smaller steps in gradient descent, which can lead to more precise convergence but may require more iterations. A larger learning rate means larger steps in gradient descent, which can lead to faster training but may overshoot the optimal point.

- Decay Rate:

The decay rate refers to the reduction of the learning rate over time. A large learning rate allows faster training, while a small learning rate offers more accuracy by reducing the chance of overshooting the optimal point. Gradually decreasing the learning rate can combine the benefits of both approaches: it starts fast and becomes more accurate as it slows down toward the end of the optimization process. In each iteration:

Learning Rate = Learning Rate * Decay Rate.

- Momentum:

Momentum in neural networks is a method to improve the learning process by using the weight increment from the previous iteration to calculate the current weight update. It can help the network converge faster and can help it avoid getting stuck in a local minimum. It is similar to physical momentum, which may help a ball escape a small hole and continue rolling to the bottom.

- When to stop the training: The network will stop training whenever one of the three criteria is met: the training error has gone below the threshold (default 0.005), the maximum number of iterations (default 20000) has been reached, or the learning time has reached the maximum.

- Maximum Iterations: On each iteration, the algorithm changes the weights of the neurons in a direction that will decrease the error of the training set. More iterations generally lead to better results until the error stops decreasing. Beyond that point, additional iterations will not lead to a better result.

- Error Threshold: The acceptable error on the training data (between 0 and 1).

- Maximum run time: Limits the calculation time. Training stops when the time limit is reached, even if the number of iterations has not reached the 'Maximum iterations' and the error has not dropped below the 'Error threshold'.
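The way the learning rate, decay rate, momentum, and the three stopping criteria interact can be seen in a toy training loop. The Python sketch below minimizes a one-weight error function, (w - 3)², instead of training a real network. The learning rate 0.3, error threshold 0.005, and 20000 iterations come from the defaults mentioned above, while the decay rate, momentum, and time limit values, as well as the function name `train`, are assumptions chosen for illustration.

```python
import time

def train(learning_rate=0.3, decay_rate=0.999, momentum=0.1,
          error_threshold=0.005, max_iterations=20000, max_seconds=5.0):
    """Gradient descent with momentum and learning-rate decay on error(w) = (w - 3)^2."""
    w = 0.0      # the single weight being learned
    step = 0.0   # weight increment from the previous iteration
    start = time.monotonic()
    for iteration in range(max_iterations):
        error = (w - 3.0) ** 2
        if error < error_threshold:
            return w, iteration, "error threshold"
        if time.monotonic() - start > max_seconds:
            return w, iteration, "maximum run time"
        gradient = 2.0 * (w - 3.0)
        # Momentum: reuse part of the previous step, then move against the gradient.
        step = momentum * step - learning_rate * gradient
        w += step
        learning_rate *= decay_rate  # Learning Rate = Learning Rate * Decay Rate
    return w, max_iterations, "maximum iterations"

weight, iterations, reason = train()
print(weight, iterations, reason)
```

Lowering `max_iterations` to a very small number makes the loop stop for a different reason, which is a quick way to see each criterion in action.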

- Network name: When you train the network, it will save the results in your browser's local storage under the name you enter here. When you clear the cache, the results will be deleted.

- Excel pagination display - Specifies the number of rows per tab. When you load a large Excel file, it will be displayed across multiple tabs.

- Rounding - how to round the results?

When a resulting value is larger than one, the tool rounds it, but when a resulting value is less than one the tool displays the significant figures.
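One possible reading of this rounding rule is sketched below in Python. The function name `format_result` and the choice of 4 digits are assumptions made for the example. The text does not state how many digits the tool keeps.

```python
def format_result(value: float, digits: int = 4) -> str:
    """Fixed decimals for values of magnitude 1 or more, significant figures below 1."""
    if abs(value) >= 1:
        return f"{value:.{digits}f}"  # round to a fixed number of decimal places
    return f"{value:.{digits}g}"      # keep a fixed number of significant figures

print(format_result(123.456789))   # 123.4568
print(format_result(0.000123456))  # 0.0001235
```

Using significant figures for small values avoids displaying a very small result as 0.0000.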